Optimal Policies for MDPs

Latest revision as of 10:11, 28 April 2023

Description

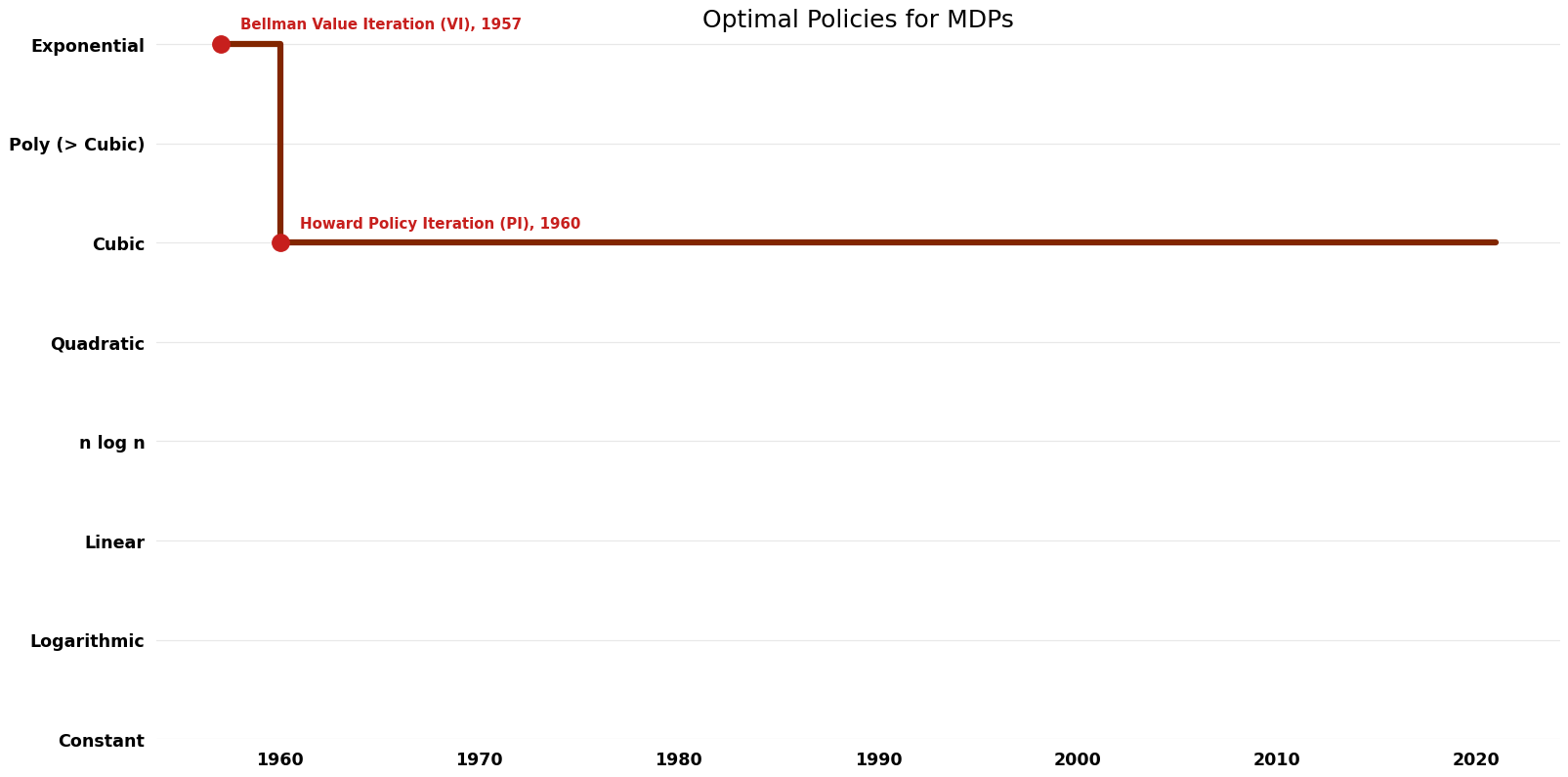

In a Markov decision process (MDP), a policy specifies which action to take in each state. An optimal policy is a policy that, in every state, chooses the action maximizing the expected "return" (or "utility") obtainable from that state. The problem is to find such an optimal policy for a given MDP.
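As a rough illustration of the problem statement, the following is a minimal sketch of value iteration on a tiny two-state MDP. The dictionary layout `transitions[s][a]`, the function name `value_iteration`, the example MDP, and the use of a discount factor `gamma` are all assumptions made for this example; the page itself does not specify how the MDP is represented or how the return is defined.

```python
# Hypothetical example MDP (not from the article): two states, two actions each.
# transitions[state][action] is a list of (probability, next_state, reward).
EXAMPLE_MDP = {
    0: {"stay": [(1.0, 0, 0.0)], "go": [(1.0, 1, 1.0)]},
    1: {"stay": [(1.0, 1, 2.0)], "back": [(1.0, 0, 0.0)]},
}


def value_iteration(transitions, gamma=0.9, tol=1e-9):
    """Return (values, policy) for a finite MDP.

    Assumes a discounted return with factor gamma < 1 (an assumption of
    this sketch, not something stated in the article).
    """
    values = {s: 0.0 for s in transitions}

    def q_value(s, a):
        # Expected immediate reward plus discounted value of the successor.
        return sum(p * (r + gamma * values[s2]) for p, s2, r in transitions[s][a])

    while True:
        delta = 0.0
        for s in transitions:
            # Bellman optimality update, applied in place.
            best = max(q_value(s, a) for a in transitions[s])
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break

    # The greedy policy with respect to the converged values.
    policy = {s: max(transitions[s], key=lambda a: q_value(s, a)) for s in transitions}
    return values, policy


values, policy = value_iteration(EXAMPLE_MDP)
print(policy)
```

In this example, always taking `stay` in state 1 earns a reward of 2 per step, so the greedy policy moves from state 0 to state 1 and then stays there. The loop stops once no value changes by more than `tol`, so the result is a numerical approximation rather than an exact solution.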

Parameters

$n$: number of states

Table of Algorithms

| Name | Year | Time | Space | Approximation Factor | Model | Reference |
|---|---|---|---|---|---|---|
| Bellman Value Iteration (VI) | 1957 | $O(2^n)$ | $O(n)$ | Exact | Deterministic | Time |
| Howard Policy Iteration (PI) | 1960 | $O(n^3)$ | $O(n)$ | Exact | Deterministic | Time |
| Puterman Modified Policy Iteration (MPI) | 1974 | $O(n^3)$ | $O(n)$ | Exact | Deterministic | |
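
For comparison with the value-based approach, policy iteration alternates between evaluating the current policy and improving it greedily. The sketch below is a simplified illustration under stated assumptions: the names `policy_iteration` and `EXAMPLE_MDP`, the dictionary layout, and the discount factor `gamma` are hypothetical, and policy evaluation is done by repeated sweeps to a tolerance rather than by solving the linear system exactly. The complexity bounds in the table therefore do not describe this code.

```python
# Hypothetical example MDP (not from the article).
# transitions[state][action] is a list of (probability, next_state, reward).
EXAMPLE_MDP = {
    0: {"stay": [(1.0, 0, 0.0)], "go": [(1.0, 1, 1.0)]},
    1: {"stay": [(1.0, 1, 2.0)], "back": [(1.0, 0, 0.0)]},
}


def policy_iteration(transitions, gamma=0.9, tol=1e-9):
    """Return (values, policy); assumes a discounted return with gamma < 1."""
    # Start from an arbitrary policy: the first listed action in each state.
    policy = {s: next(iter(transitions[s])) for s in transitions}
    values = {s: 0.0 for s in transitions}

    def q_value(s, a):
        # Expected immediate reward plus discounted value of the successor.
        return sum(p * (r + gamma * values[s2]) for p, s2, r in transitions[s][a])

    while True:
        # Policy evaluation: sweep until the values of the fixed policy settle.
        # (Iterative approximation, swapped in for an exact linear solve.)
        while True:
            delta = 0.0
            for s in transitions:
                new_value = q_value(s, policy[s])
                delta = max(delta, abs(new_value - values[s]))
                values[s] = new_value
            if delta < tol:
                break

        # Policy improvement: switch only where an action is strictly better.
        stable = True
        for s in transitions:
            best = max(transitions[s], key=lambda a: q_value(s, a))
            if q_value(s, best) > q_value(s, policy[s]) + tol:
                policy[s] = best
                stable = False
        if stable:
            return values, policy


values, policy = policy_iteration(EXAMPLE_MDP)
print(policy)
```

Limiting the inner evaluation loop to a fixed, small number of sweeps instead of running it to convergence gives the general idea behind modified policy iteration.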